I recently had a discussion with a Twitter connection regarding the ease with which VMware vSAN can be enabled. It is so simple, in fact, that it’s probably quicker than most client application installs. Granted, this discussion assumed that all hardware was already in place, had been racked and cabled, and that all hosts had been added to a pre-existing vCenter Server. But the point still stands – enabling vSAN can be completed in 10 clicks. Yup, 10. No, honest… just keep reading.

I’ve been playing with VMware vSAN for over a year; however, following the Twitter discussion, I realised I’ve not posted about it. With the ever-increasing functionality and integration of vSAN and NSX, it’s about time I put pen to paper (or fingers to keyboard).

In this first post of a new VMware vSAN series, we’ll begin by enabling vSAN. I know there are a multitude of blog posts out there detailing this procedure, but for continuity, you’ll have to put up with another. I will only focus on the vSAN stack itself (I won’t be covering any physical server manufacturers or models). Future posts in this series will focus on storage policies, rules, and tags; deduplication, compression, and encryption; fault domains, and a number of troubleshooting guides.

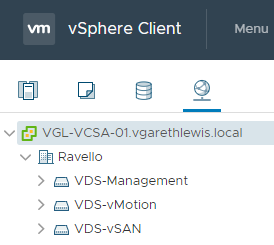

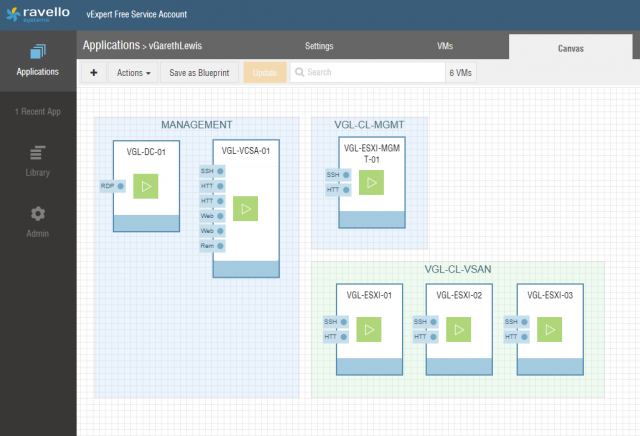

For reference, the vSAN environment detailed in this post is hosted on Oracle Cloud Infrastructure via Ravello Systems where, as a VMware vExpert, I am afforded 1,000 free CPU hours per month.

Pre-Requisites & Pre-Reading

Before we jump into enabling vSAN, I would advise a little pre-reading. Please do visit the VMware Docs Hardware Requirements for vSAN article for a full and detailed list of hardware requirements. A number of the key points are detailed below:

Cluster Requirements

- The vSAN cluster must contain a minimum of three ESXi hosts that contribute local storage.

- Hosts residing in a vSAN cluster must not participate in other clusters.

Networking Requirements

- Hybrid Configurations – Minimum one 1 Gbps physical NIC (per host) dedicated to vSAN.

- All Flash Configurations – Minimum one 10 Gbps physical NIC (per host) dedicated to vSAN.

- All hosts must be connected to a Layer 2 or Layer 3 network.

- Each ESXi host in the cluster must have a dedicated VMkernel port, regardless of whether it contributes to storage.

Storage Requirements

- Cache Tier:

  - Minimum one SAS or SATA solid-state drive (SSD) or PCIe flash device.

- Capacity Tier:

  - Hybrid Configurations – Minimum one SAS or NL-SAS magnetic disk.

  - All Flash Configurations – Minimum one SAS or SATA solid-state drive (SSD) or a PCIe flash device.

- A SAS or SATA HBA, or a RAID controller set up in non-RAID/pass-through or RAID 0 mode.

- Flash Boot Devices: When booting a vSAN 6.0 enabled ESXi host from a USB or SD card, the size of the disk must be at least 4 GB. When booting a vSAN host from a SATADOM device, you must use a Single-Level Cell (SLC) device and the size of the boot device must be at least 16 GB.
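The key points above lend themselves to a quick sanity check before you open the wizard. The following is a minimal Python sketch, not a VMware tool: the `Host` class, its field names, and the `check_cluster` function are all hypothetical, and the sample values are assumptions for illustration rather than figures read from my lab.

```python
from dataclasses import dataclass


@dataclass
class Host:
    # Hypothetical description of one ESXi host; field names are illustrative only.
    name: str
    vsan_nic_gbps: float       # speed of the physical NIC dedicated to vSAN
    cache_devices: int         # flash devices intended for the cache tier
    capacity_devices: int      # devices intended for the capacity tier
    has_vsan_vmkernel: bool    # dedicated VMkernel port for vSAN traffic


def check_cluster(hosts, all_flash=False):
    """Return a list of problems; an empty list means the basic checks passed."""
    problems = []

    # At least three hosts must contribute local storage (cache + capacity).
    contributing = [h for h in hosts
                    if h.cache_devices >= 1 and h.capacity_devices >= 1]
    if len(contributing) < 3:
        problems.append(
            f"only {len(contributing)} host(s) contribute storage; 3 required")

    # Hybrid needs at least 1 Gbps, all-flash at least 10 Gbps.
    min_nic_gbps = 10 if all_flash else 1
    for h in hosts:
        if h.vsan_nic_gbps < min_nic_gbps:
            problems.append(f"{h.name}: vSAN NIC below {min_nic_gbps} Gbps")
        # Every host needs a VMkernel port, even if it contributes no storage.
        if not h.has_vsan_vmkernel:
            problems.append(f"{h.name}: no dedicated vSAN VMkernel port")

    return problems


# Assumed sample values for a three-node hybrid lab.
lab = [Host(f"VGL-ESXI-0{i}", 10, 1, 2, True) for i in (1, 2, 3)]
print(check_cluster(lab) or "basic requirements met")
```

This only covers the handful of points listed here. The VMware Docs article remains the authority for the full list.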

Ravello Topology

A simple enough topology – a single domain controller, vCenter Server Appliance, and a three-node VMware vSAN cluster. You’ll note a single-node Management cluster in the below diagram also. This houses NSX appliances and will not interact with our vSAN cluster in any way.

I won’t focus on the Ravello setup and configuration in this post; however, if this is something of interest, please let me know.

For those of you who are interested in running the vCenter Server Appliance on bare metal Oracle Ravello, please take a look at Ian Sanderson’s great post (Deploy VMware VCSA 6.5 onto bare metal Oracle Ravello). The post details the process in full and covers a number of gotchas. Note, Ian’s article (although targeting VMware vCenter 6.5) also works flawlessly with vCenter 6.7 Update 1.

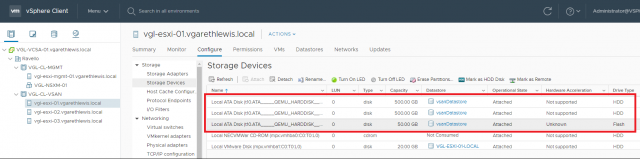

ESXi/vSAN Node Disk Configuration

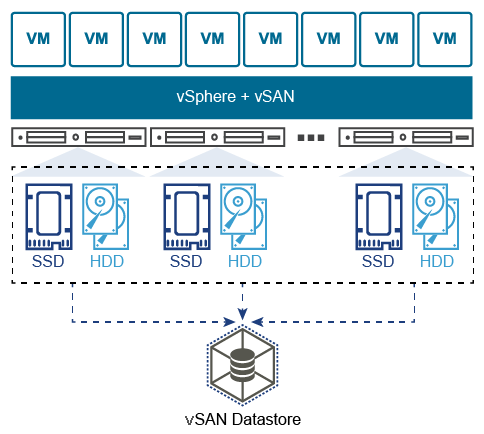

Before we detail the disk configuration, let’s talk disk tiers. If you are new to vSAN, it’s important to note the two types of disk – Cache and Capacity. As the names might imply, the cache tier is utilised to stage and process live/hot data. This data is then off-loaded and saved to the capacity tier once it is no longer in use (that is, once it becomes cold). Data can be (and is) staged/de-staged between tiers constantly, as and when the data is requested. As you can imagine, there is a high I/O requirement for cache disks and, as such, SSDs are recommended. Check out the great VMware Docs article, Design Considerations for Flash Caching Devices in vSAN.

Each vSAN node in our lab example has been configured with four disks:

- ESXi – 20GB

- vSAN Capacity – 500GB HDD

- vSAN Capacity – 500GB HDD

- vSAN Cache – 50GB SSD

In my lab environment, all cache tier disks have been marked as SSDs (see below screenshot). More disks are preferable in a production environment; however, for a lab, the below configuration is just fine.
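To put some numbers on that layout, here is a small Python sketch of the arithmetic for the three-node lab. The disk sizes come from the list above; the variable names are my own, and the final figure assumes a mirroring policy that keeps two copies of each object, which is an assumption here since storage policies are a topic for a later post.

```python
HOSTS = 3
CAPACITY_DISKS_GB = [500, 500]   # per host, from the disk list above
CACHE_DISK_GB = 50               # per host

# Only capacity-tier disks count towards the datastore size;
# cache devices do not add usable capacity.
raw_capacity_gb = HOSTS * sum(CAPACITY_DISKS_GB)
cache_total_gb = HOSTS * CACHE_DISK_GB

# Cache as a fraction of the capacity it fronts, per host.
cache_ratio = CACHE_DISK_GB / sum(CAPACITY_DISKS_GB)

# Assumption: every object is mirrored once (two copies),
# so roughly half the raw capacity is usable before any overhead.
mirrored_usable_gb = raw_capacity_gb / 2

print(f"Raw capacity:      {raw_capacity_gb} GB")
print(f"Total cache:       {cache_total_gb} GB")
print(f"Cache ratio:       {cache_ratio:.1%}")
print(f"Usable if mirrored: {mirrored_usable_gb:.0f} GB (approx.)")
```

Treat the result as a rough guide only. The datastore will report slightly different figures once on-disk format overhead is taken into account.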

Network Configuration

Conforming to the vSAN Network Requirements, a dedicated vSAN network has been created. Management and vMotion services have each been assigned to a dedicated network segment as well. Each vSAN node has been assigned six NICs (two per service), all of which have been configured as uplinks within the appropriate vSphere Distributed Switches (VDSs). For information, both the vSAN and vMotion VDSs have been configured to allow jumbo frames.

- 2x Management – 10.0.0.0/24

- 2x vMotion – 10.0.1.0/24

- 2x vSAN – 10.0.2.0/24
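If you want to prove jumbo frames work end to end, the usual trick is to send a ping that exactly fills the frame with fragmentation disallowed. The Python sketch below works out the payload size for that test. It assumes an MTU of 9000 (the post only states that jumbo frames are allowed, not the exact value) and an IPv4 header without options; the function name is my own.

```python
IP_HEADER_BYTES = 20    # IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo header


def max_icmp_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    return mtu - IP_HEADER_BYTES - ICMP_HEADER_BYTES


# Assumed jumbo MTU of 9000, alongside the standard 1500 for comparison.
print(max_icmp_payload(9000))  # 8972
print(max_icmp_payload(1500))  # 1472
```

On ESXi, a payload of this size is commonly passed to `vmkping` together with its don't-fragment option, sourced from the vSAN VMkernel interface. Which `vmk` interface that is depends on your own configuration.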

The IP configuration for each vSAN node is detailed below:

| | VGL-ESXI-01 | VGL-ESXI-02 | VGL-ESXI-03 |
| --- | --- | --- | --- |
| Management | 10.0.0.111 | 10.0.0.112 | 10.0.0.113 |
| vMotion | 10.0.1.111 | 10.0.1.112 | 10.0.1.113 |
| vSAN | 10.0.2.111 | 10.0.2.112 | 10.0.2.113 |
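An addressing plan like this is easy to get wrong by a single octet, so it can be worth checking it programmatically before configuring the VMkernel ports. The following Python sketch uses the standard `ipaddress` module to confirm that each address sits in the expected subnet and that no address is used twice. The `validate` function and the dictionary layout are my own illustration; the addresses are those from the table above.

```python
import ipaddress

SUBNETS = {
    "Management": ipaddress.ip_network("10.0.0.0/24"),
    "vMotion": ipaddress.ip_network("10.0.1.0/24"),
    "vSAN": ipaddress.ip_network("10.0.2.0/24"),
}

HOST_IPS = {
    "VGL-ESXI-01": {"Management": "10.0.0.111", "vMotion": "10.0.1.111", "vSAN": "10.0.2.111"},
    "VGL-ESXI-02": {"Management": "10.0.0.112", "vMotion": "10.0.1.112", "vSAN": "10.0.2.112"},
    "VGL-ESXI-03": {"Management": "10.0.0.113", "vMotion": "10.0.1.113", "vSAN": "10.0.2.113"},
}


def validate(host_ips, subnets):
    """Return a list of addressing errors; an empty list means the plan is consistent."""
    errors = []
    seen = set()
    for host, services in host_ips.items():
        for service, ip in services.items():
            addr = ipaddress.ip_address(ip)
            # Each service address must fall inside its dedicated segment.
            if addr not in subnets[service]:
                errors.append(f"{host}: {ip} is outside the {service} subnet")
            # No address may be assigned more than once.
            if addr in seen:
                errors.append(f"{host}: {ip} is a duplicate")
            seen.add(addr)
    return errors


print(validate(HOST_IPS, SUBNETS) or "addressing plan is consistent")
```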

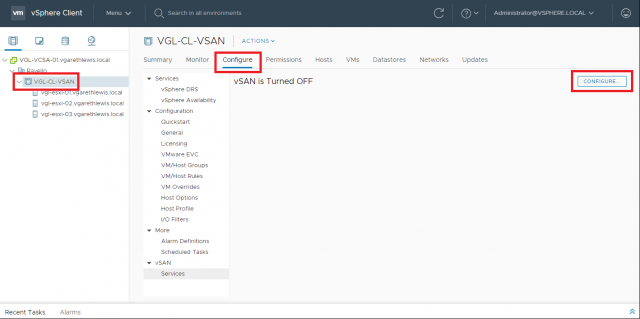

Installation

As mentioned at the start of this post, enabling VMware vSAN is amazingly simple. When queried just how simple, I jumped into my lab to check. From clicking the Configure button, vSAN can be enabled in 10 clicks (four of those clicks are used configuring cache/capacity disks). It really is that simple. So, let’s take a look at the process.

1. First of all, select your new vSAN cluster, browse to Configure, and click Configure… (our first click).

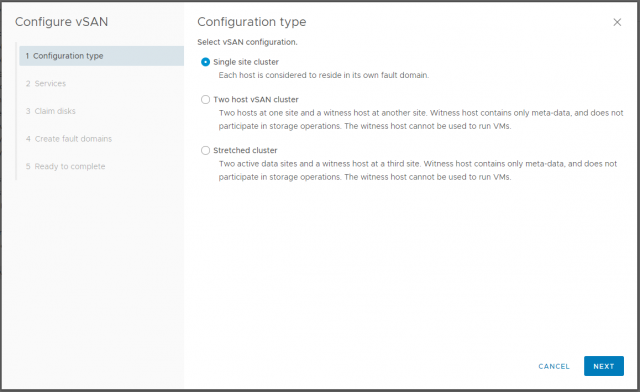

2. In this example, we will be configuring a single site cluster. As such, click Single site cluster and click Next.

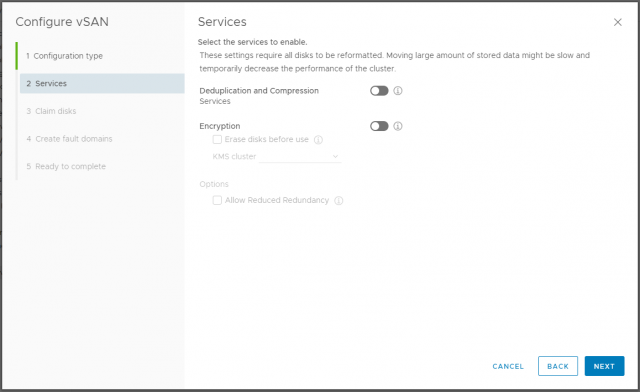

3. In this article, we won’t be covering deduplication, compression, or encryption; however, you can see how easy these are to enable. Click Next.

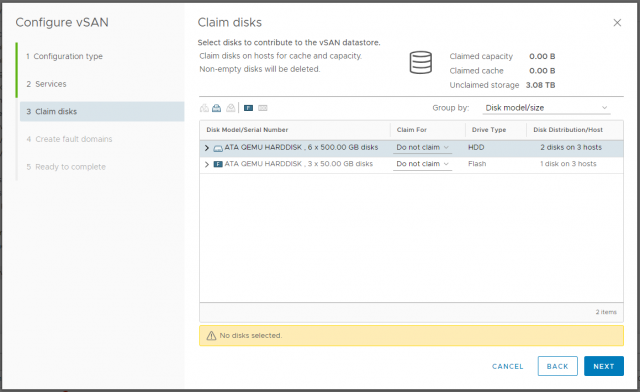

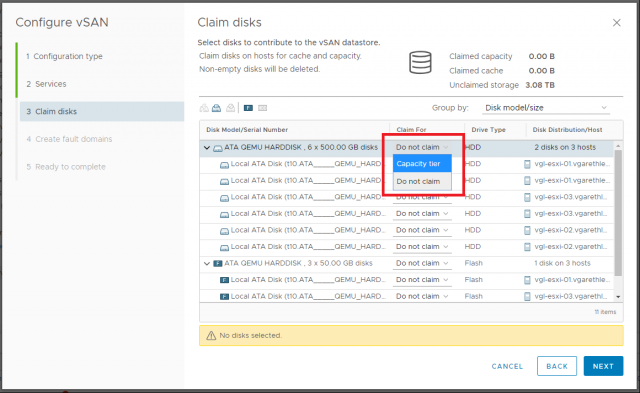

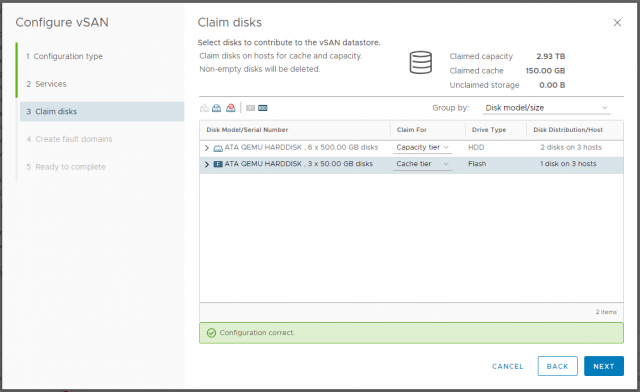

4. Next we will define our disk roles.

5. From the Claim for drop-down list, assign all 500 GB HDDs to the Capacity Tier, and all 50 GB SSDs to the Cache Tier.

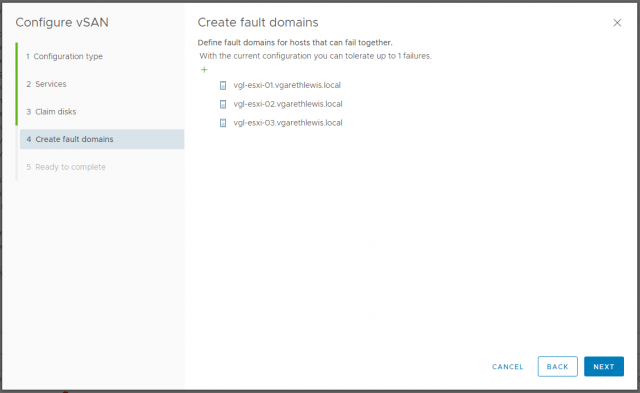

7. We will cover Fault Domains in a future post. For now, accept the default configuration and click Next.

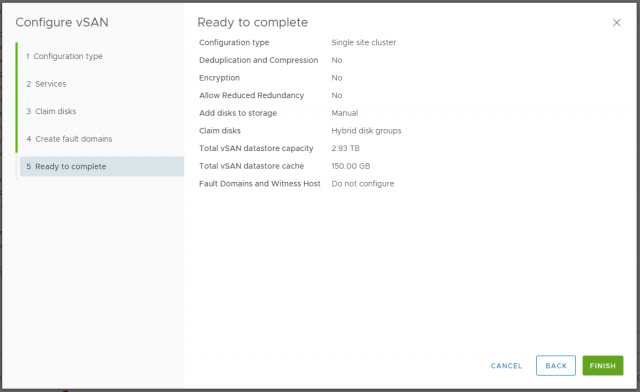

8. Review the summary and, when ready, click Finish.

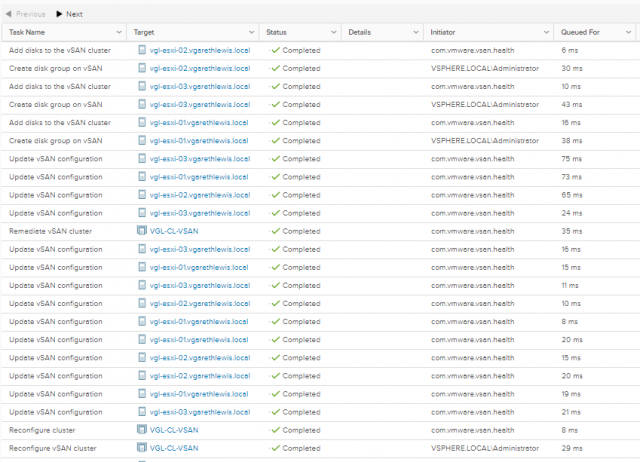

9. Monitor the vSAN configuration throughout the process.

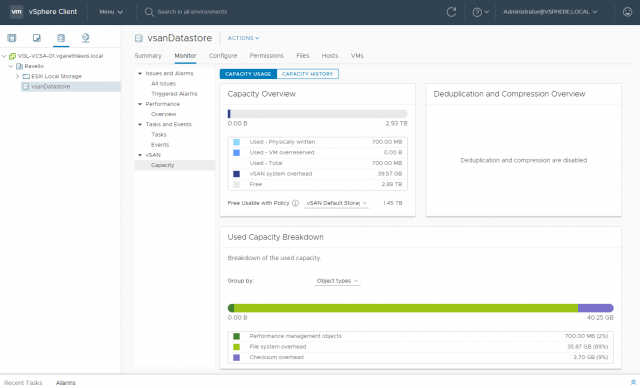

10. When the vSAN configuration is complete, browse to the Storage tab and note the creation of a new vsanDatastore. From here, you’ll be able to check the capacity overview and breakdown.

Post-Installation Checks

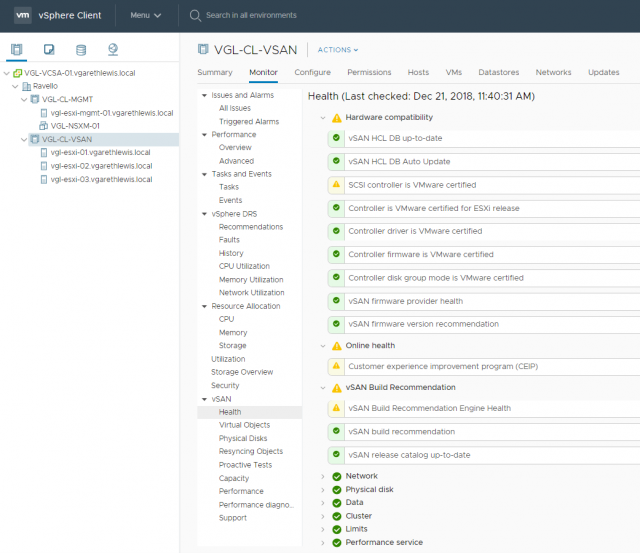

One final item to check before placing any workload on the vsanDatastore is the vSAN Health dashboard.

After selecting your vSAN cluster, browse to Monitor > vSAN > Health. Note, I’m not concerned with the three warnings below. As this is a nested/hybrid environment, I don’t expect a VMware certified SCSI controller; I have not enrolled in the CEIP; and to utilise the vSAN Build Recommendation Engine Health feature I would have to log in to MyVMware… which I have not. So, all explainable and, most importantly, I have no hardware issues and, instead, have a super healthy and beautiful baby vSAN cluster.

Conclusion

That’s it. In just 10 clicks we have enabled VMware vSAN. In our next post, and before we begin placing workloads on our vSAN cluster, we will dive into vSAN Storage Policies, rules, and tags.
