One of the major upcoming projects this year will see the upgrade and possible redesign of our Exchange environment, which means moving our current Exchange 2010 solution to Exchange 2013. With this come a number of differences (the management GUI to name just one), and I aim to capture my initial thoughts on the product in this and upcoming posts. In future posts we’ll cover various topics including the creation of database availability groups (DAGs), load balancing, and general all-round resilient goodness!

So, back in the home lab, the idea was to get a better feel for the product by building a lightweight demo solution, but one which still offers HA capabilities. As the Outlook Web App now offers near-Office 365 functionality, there is no longer any need for multiple Outlook clients running on Windows in the lab; this means I am able to run one domain controller, a Client Access server, and two mailbox servers all on a single laptop running VMware Workstation. All VMs will be running trial versions of Microsoft Windows Server 2012 R2, and all will be housed on a Crucial BX100 250GB SSD for best performance.

Network Prerequisites

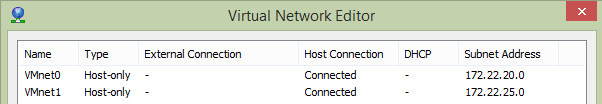

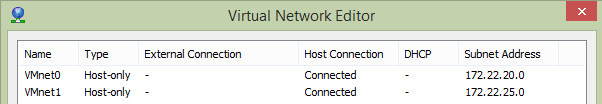

Before building any lab-based solution, I always start with the networking requirements, and the great thing about working with VMware Workstation is that VLANs can be created and configured quickly and easily.

We’ll be using two VLANs in order to segregate different traffic types; the first VLAN is for our server LAN traffic, and the second for Exchange replication traffic (more on the latter shortly).

- VMnet0 – VLAN 20 – LAN Traffic – 172.22.20.0/24

- VMnet1 – VLAN 25 – Replication Traffic – 172.22.25.0/24

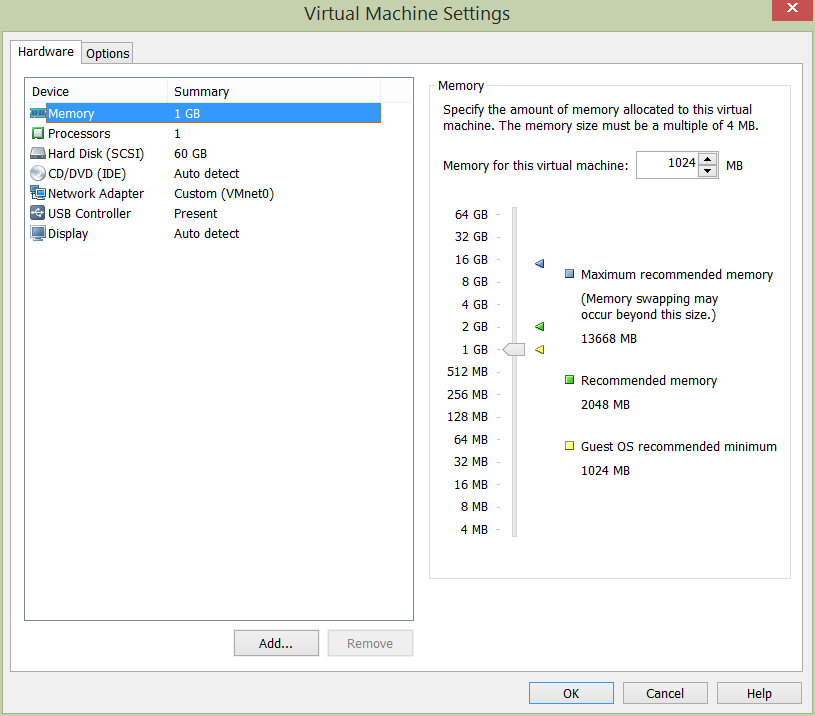

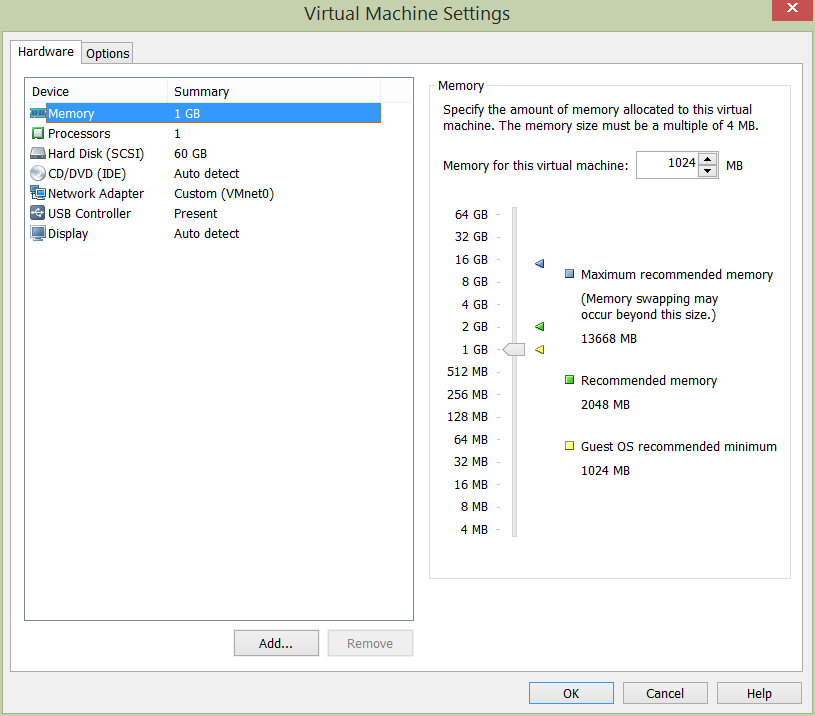

Domain Controller Configuration – ADDSV01

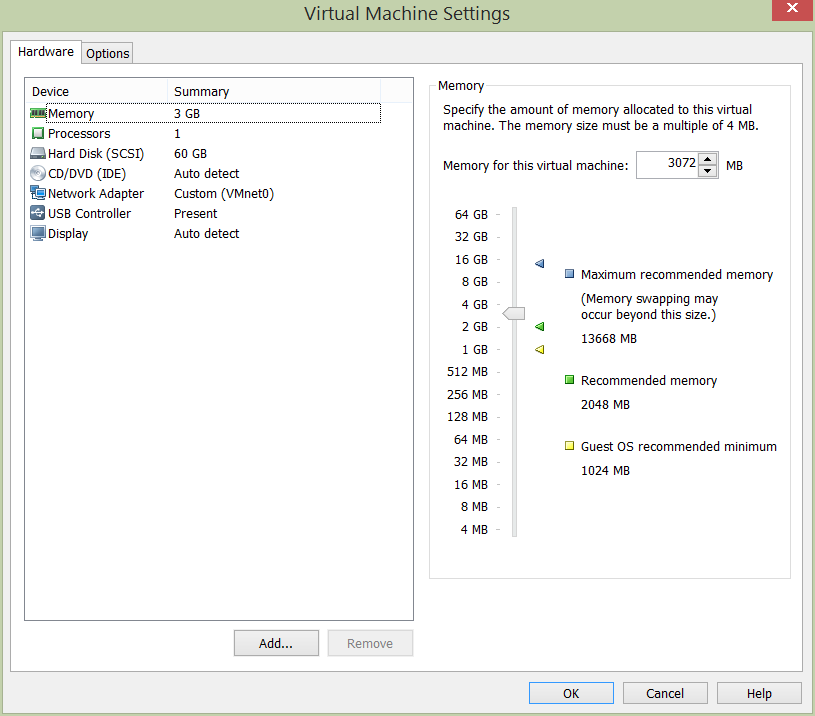

Client Access Server 01 Configuration – EXCAV01

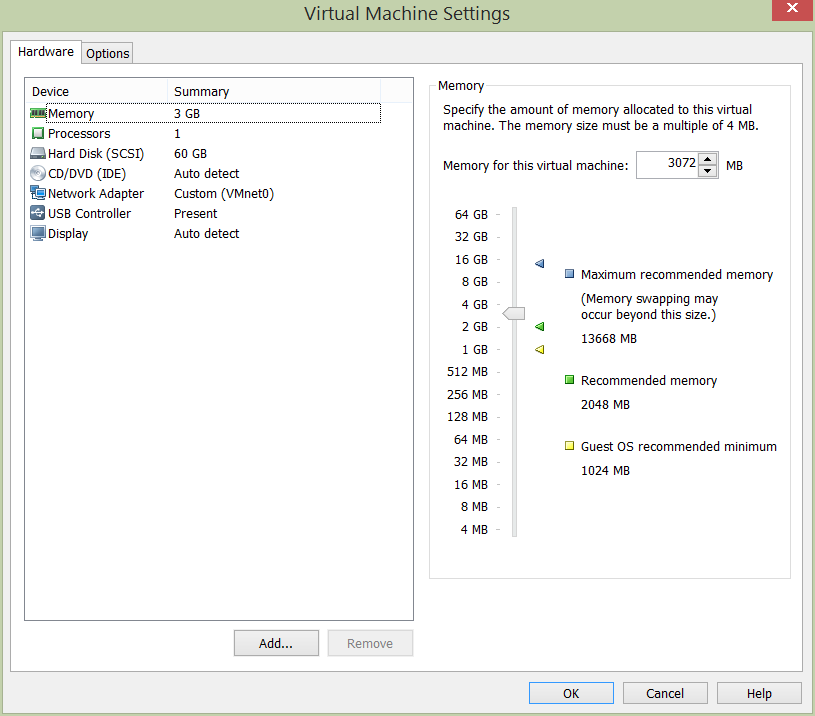

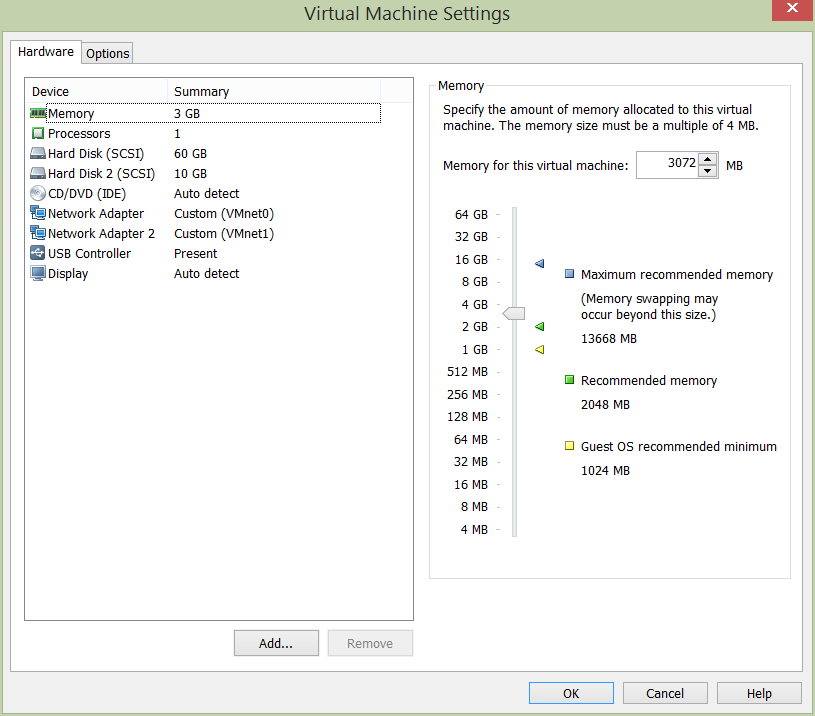

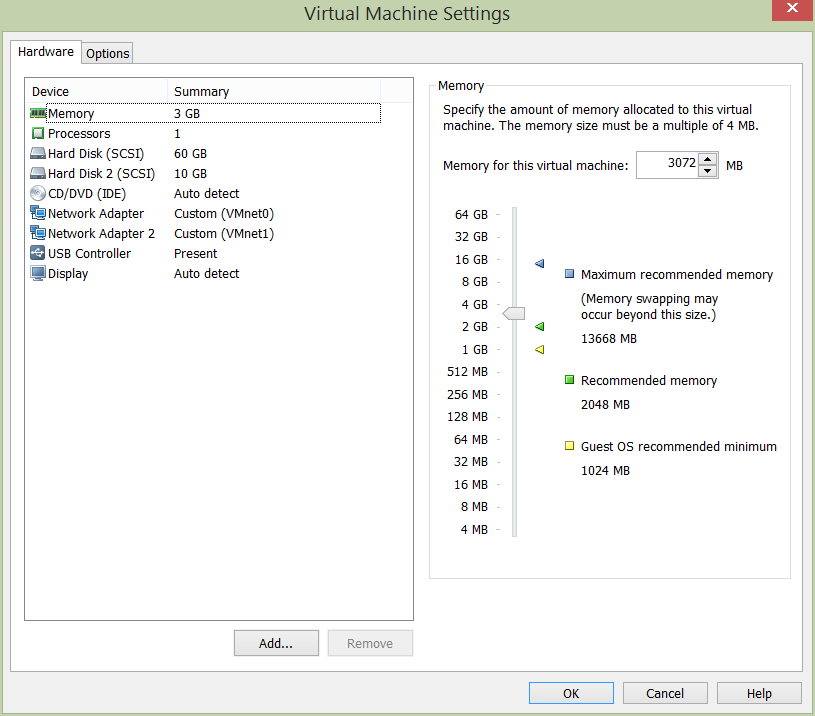

Mailbox Database Server Configuration – EXMBV01 & EXMBV02

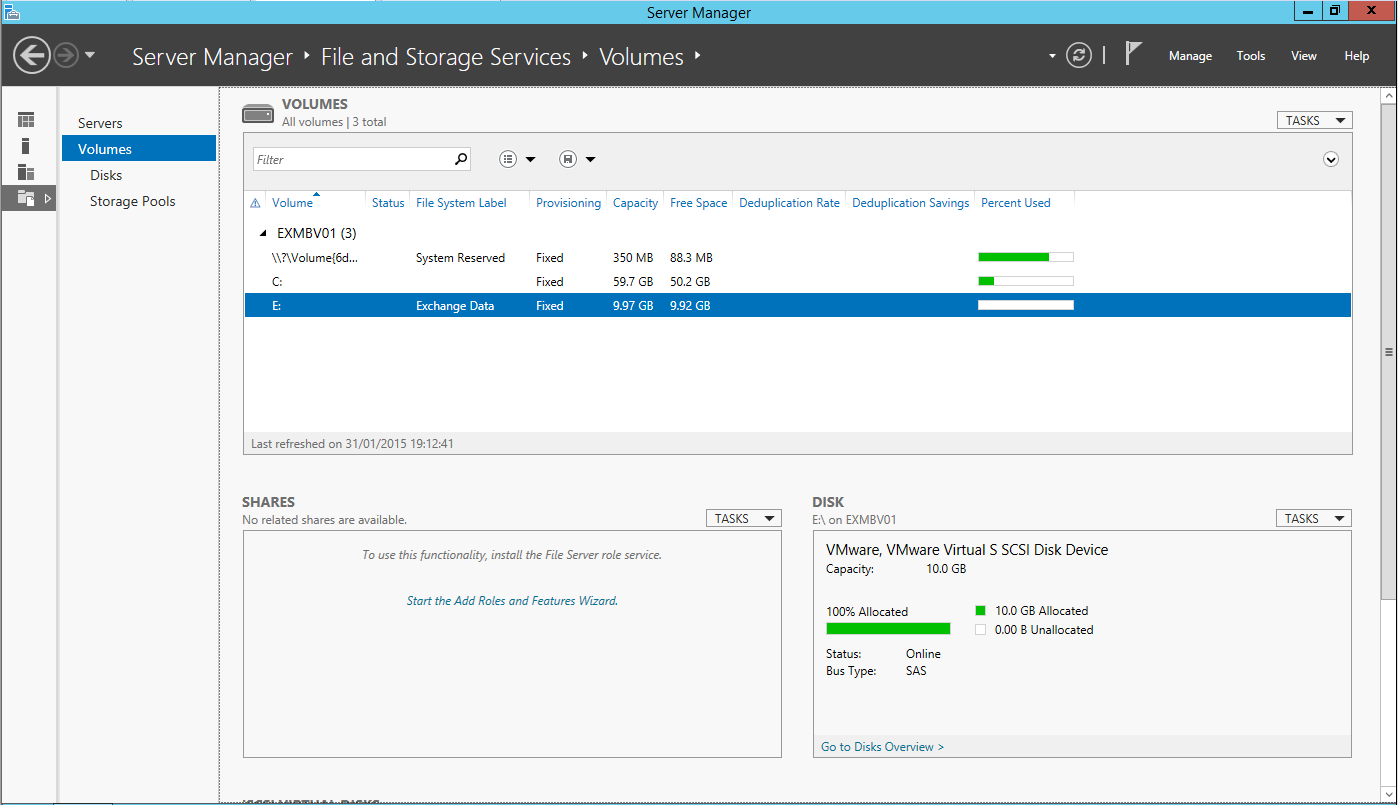

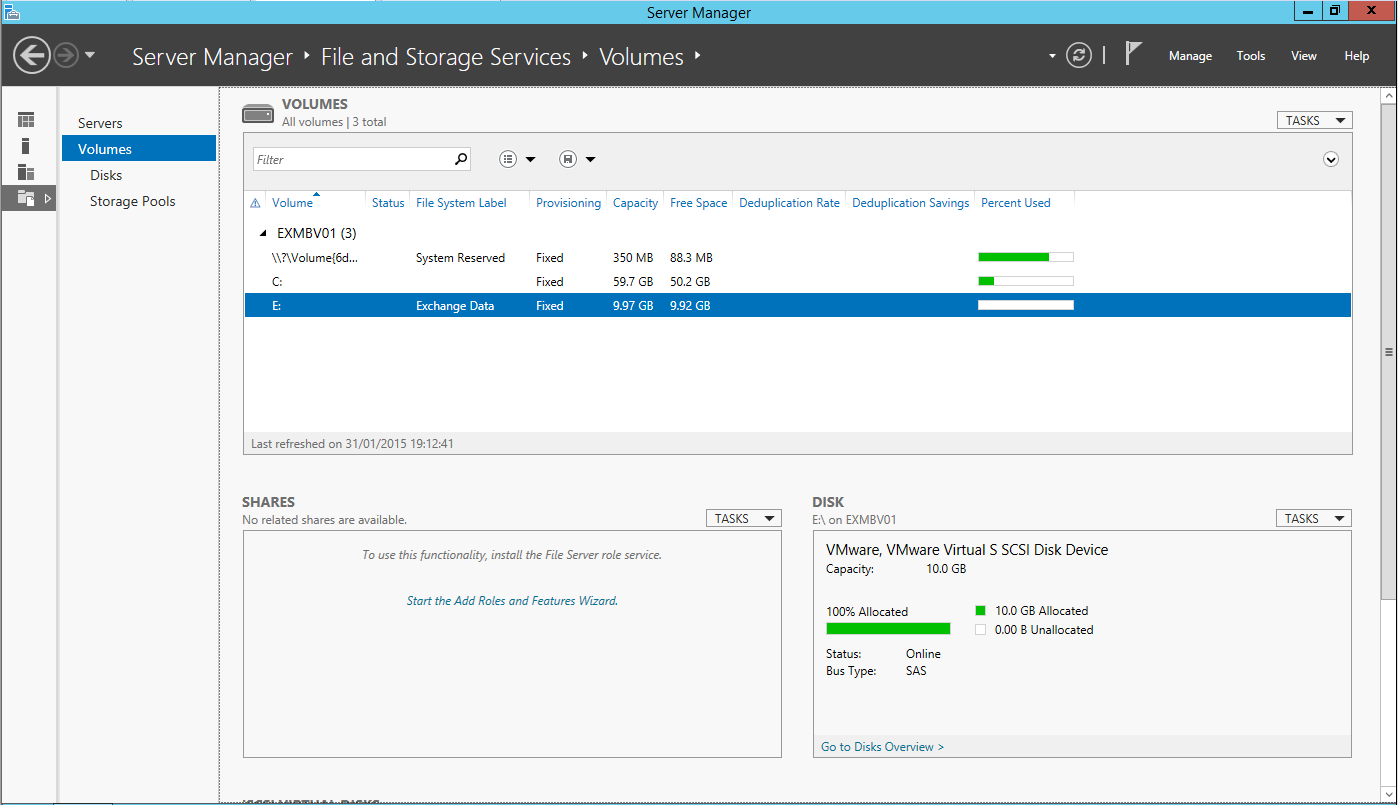

Mailbox storage requirements will see an additional 10GB disk added to each of the mailbox servers (as seen in the below VM configuration). The new disk will be used to house our mailbox databases. In production we would obviously add appropriately sized disks and span our databases across them; however, a single thin-provisioned 10GB disk in each of our mailbox servers will be perfectly acceptable for a lab environment.

In the below screenshot I’ve brought the new disk online and created a volume accordingly:

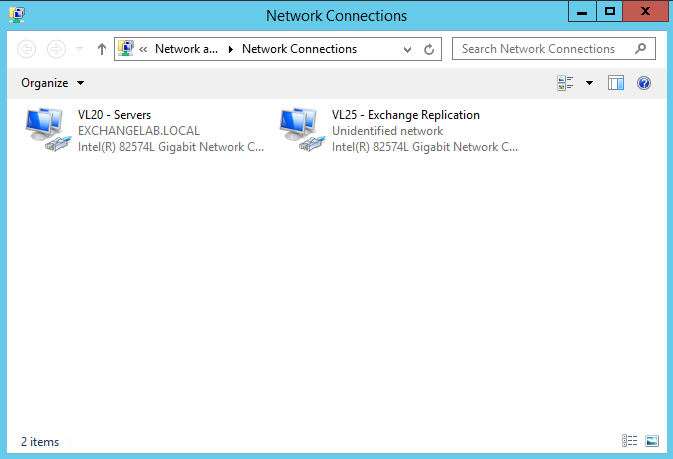

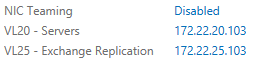

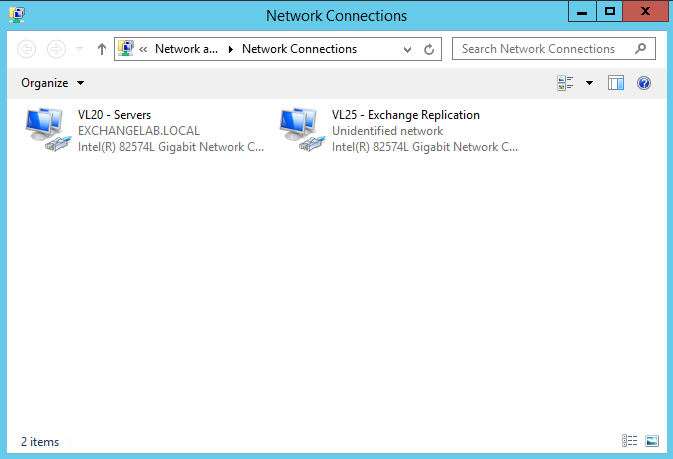

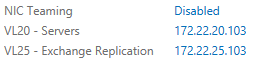
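For anyone who prefers to script the disk preparation, the same steps can be sketched in PowerShell using the storage cmdlets that ship with Windows Server 2012 R2. This is a minimal sketch rather than the exact steps I followed: it assumes the new 10GB disk is the only uninitialised disk on the server, and the drive letter `E` and the volume label `MailboxDB` are my own placeholder choices.

```powershell
# Assumption: the newly added 10GB disk is the only RAW (uninitialised) disk.
$disk = Get-Disk | Where-Object PartitionStyle -eq 'RAW'

# A freshly attached disk may be offline and read-only, so clear both flags.
# (-IsOffline and -IsReadOnly belong to different parameter sets, hence two calls.)
$disk | Set-Disk -IsOffline $false
$disk | Set-Disk -IsReadOnly $false

# Initialise the disk, create a single partition and format it as NTFS.
# Drive letter E and the label are placeholders; adjust to suit your VM.
$disk | Initialize-Disk -PartitionStyle GPT -PassThru |
    New-Partition -DriveLetter E -UseMaximumSize |
    Format-Volume -FileSystem NTFS -NewFileSystemLabel 'MailboxDB' -Confirm:$false
```

Run it once on each mailbox server (EXMBV01 and EXMBV02) from an elevated PowerShell prompt.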

Lastly, we’ll be adding an additional NIC to each of the mailbox servers. I’ll be covering this in depth in my next post when I configure a database availability group (DAG); specifically, the NICs will be used to segregate replication traffic from our production network. The screenshot below shows our newly added NIC for replication traffic. The additional mailbox server NICs will be configured as follows:

- EXMBV01 – 172.22.25.102/24

- EXMBV02 – 172.22.25.103/24

Note that Exchange replication requires the new NIC to have no gateway and no DNS servers, and DNS registration must be disabled (see below IP configuration for EXMBV02). Ensure the relevant fields are left blank and the registration option is unticked.
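The replication NIC settings described above can also be applied from PowerShell. The following is a rough sketch for EXMBV02, assuming the new adapter has been renamed to `Replication` (a hypothetical name; check yours with `Get-NetAdapter`). On EXMBV01 the address would be 172.22.25.102 instead.

```powershell
# Hypothetical adapter name; list the real ones with Get-NetAdapter.
$alias = 'Replication'

# Assign the static replication address. No -DefaultGateway is supplied,
# so the interface is left without a gateway.
New-NetIPAddress -InterfaceAlias $alias -IPAddress 172.22.25.103 -PrefixLength 24

# Clear any DNS servers configured on the interface.
Set-DnsClientServerAddress -InterfaceAlias $alias -ResetServerAddresses

# Stop this connection's address from being registered in DNS.
Set-DnsClient -InterfaceAlias $alias -RegisterThisConnectionsAddress $false

# Review the result: the gateway and DNS server fields should be empty.
Get-NetIPConfiguration -InterfaceAlias $alias
```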

Windows Server Manager now shows our NICs as below (EXMBV02):

Installing Microsoft Exchange Server 2013

Now that our prereq work is complete, we can move on to the actual installation.

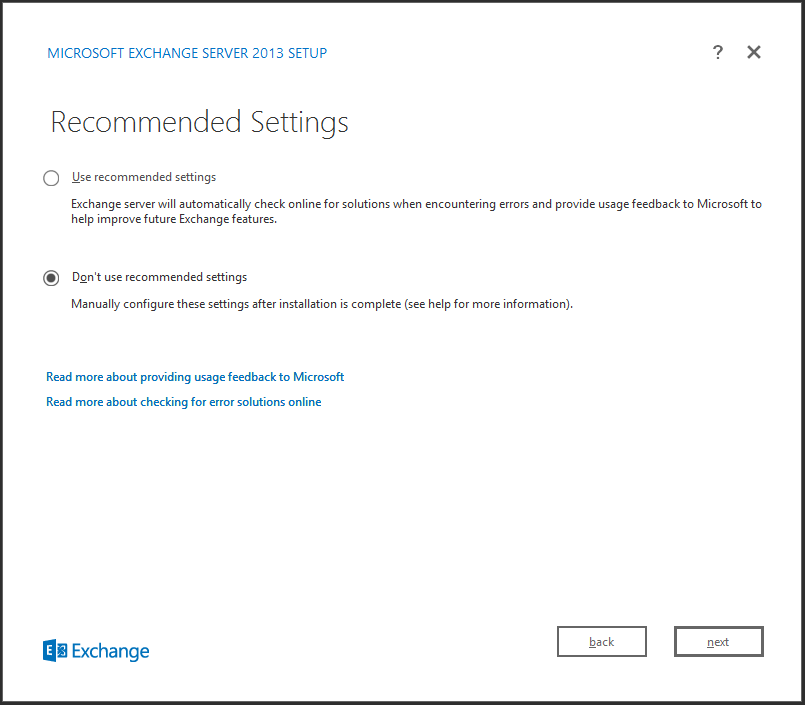

Once the ISO had been loaded and its pre-installation checks had completed, installation across the three servers was very easy. For resilience, Client Access and Mailbox roles were segregated onto their own VMs. Installation of the Client Access role onto EXCAV01 took just 10 minutes, with the Mailbox role installing on EXMBV01 and EXMBV02 even quicker. All in all, the three roles were installed in just under 45 minutes. Not bad at all; but this is where flash storage comes into its own.

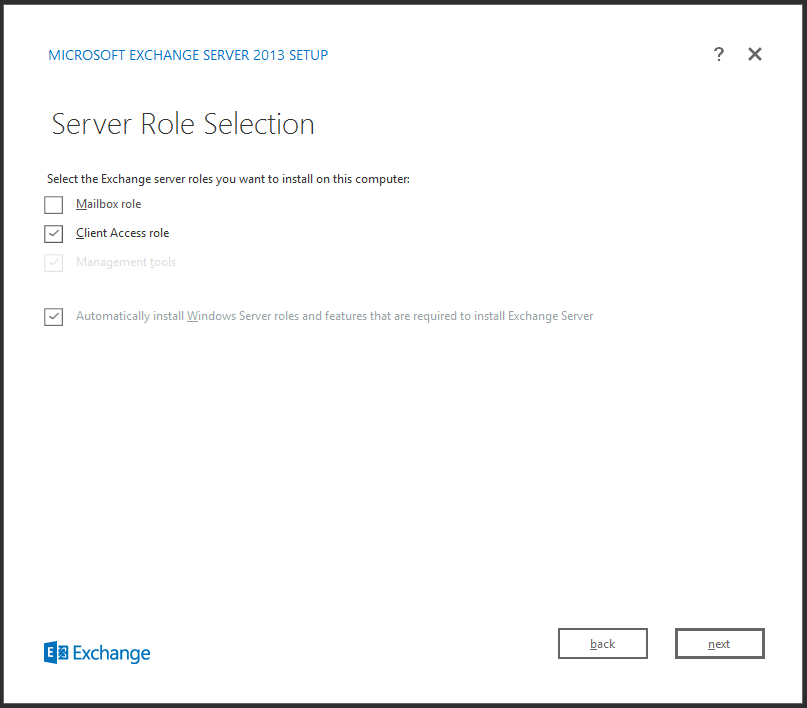

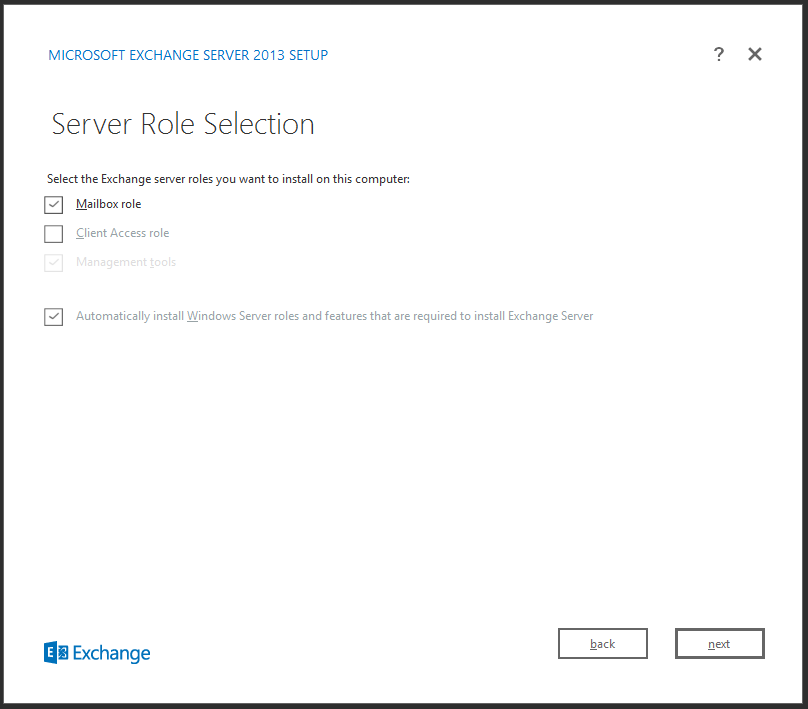

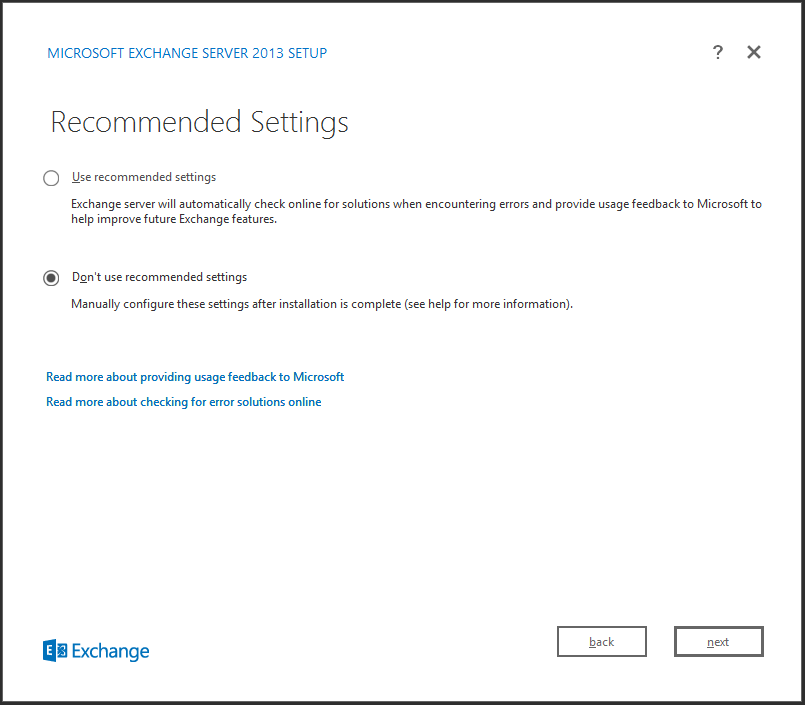

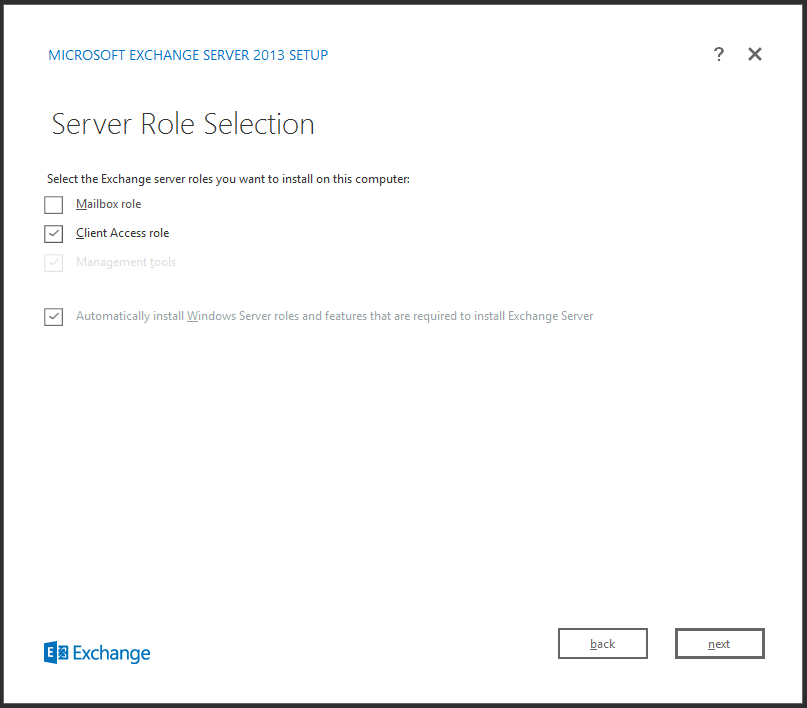

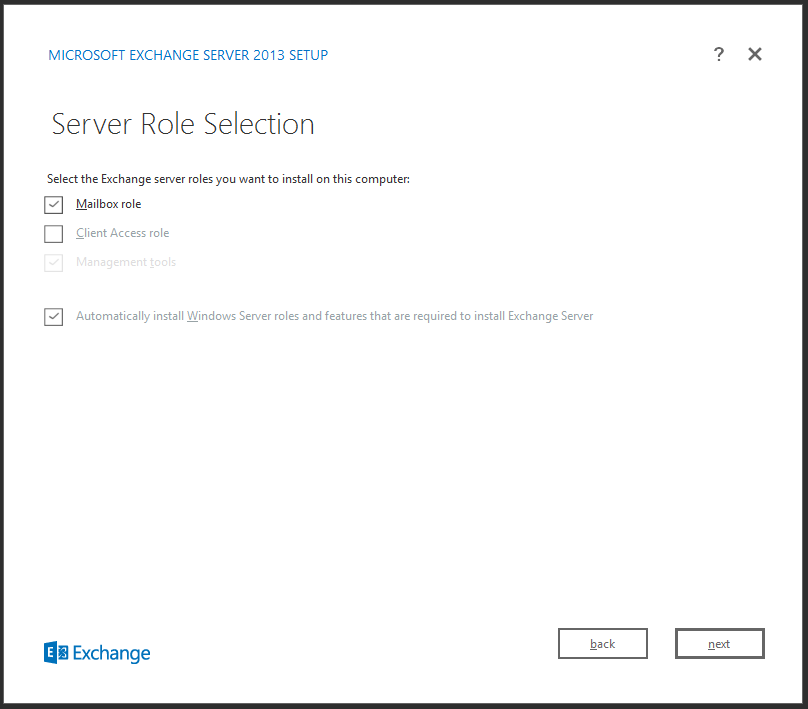

The below screenshots show just how ‘clean’ the 2013 installation process is. To make sure we install only the roles we require, select ‘Don’t use recommended settings’:

For the Client Access role, we select the relevant option for EXCAV01:

…and likewise for the Mailbox role on both EXMBV01 and EXMBV02:

Following the readiness checks, installation proceeded without any fuss.
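I used the GUI throughout, but the same role split can be achieved with Exchange 2013's unattended setup. A minimal sketch follows, assuming the Exchange ISO is mounted as drive `D:` (an assumption on my part) and that the Windows prerequisites are already in place:

```powershell
# Assumption: the Exchange 2013 ISO is mounted as D: on each server.
Set-Location D:\

# On EXCAV01: install only the Client Access role.
.\Setup.exe /Mode:Install /Roles:ClientAccess /IAcceptExchangeServerLicenseTerms

# On EXMBV01 and EXMBV02: install only the Mailbox role.
.\Setup.exe /Mode:Install /Roles:Mailbox /IAcceptExchangeServerLicenseTerms
```

Each command is run on the server named in its comment, from an elevated prompt.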

Post Installation Checks

And that’s it. If everything always went this easily, it’d be an easy life!

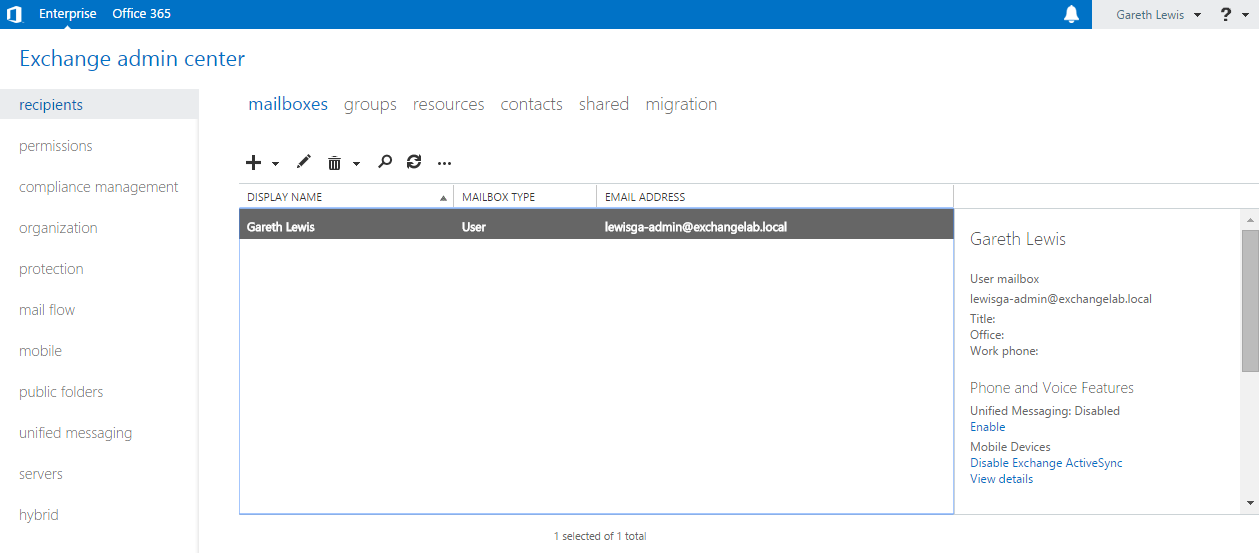

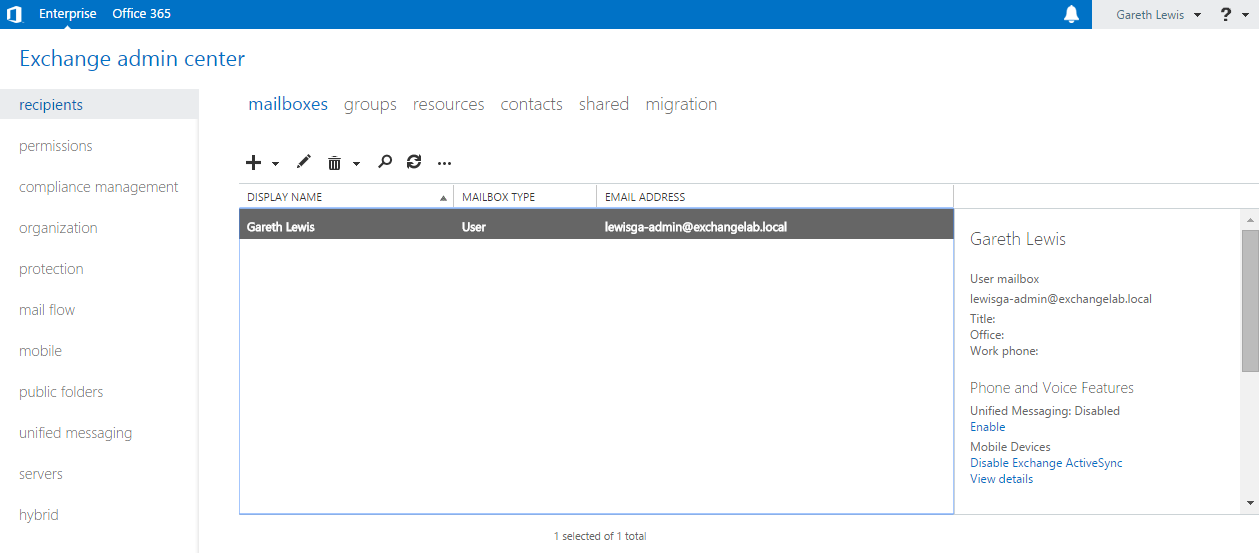

Following the installation of the Mailbox roles we’re now able to log in to the new Exchange admin center (EAC). Unlike the management console in previous versions, this is now solely web based, and so allows us to log in from anywhere on the network:

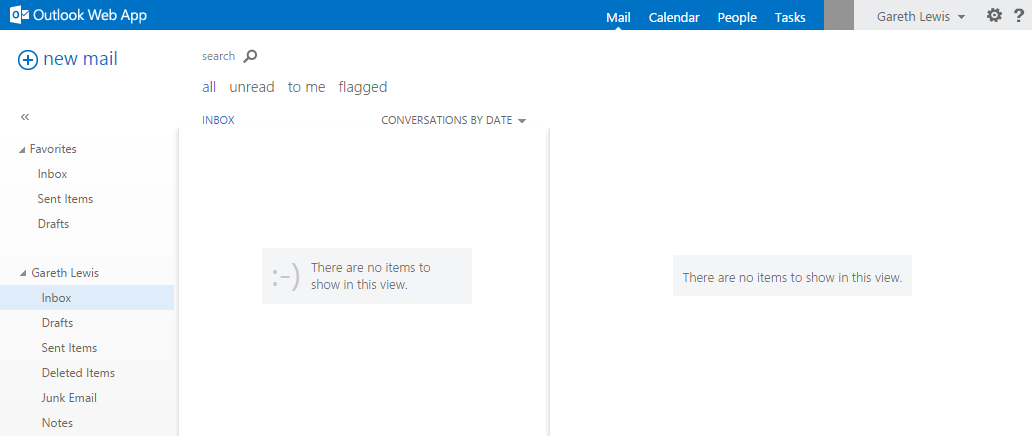

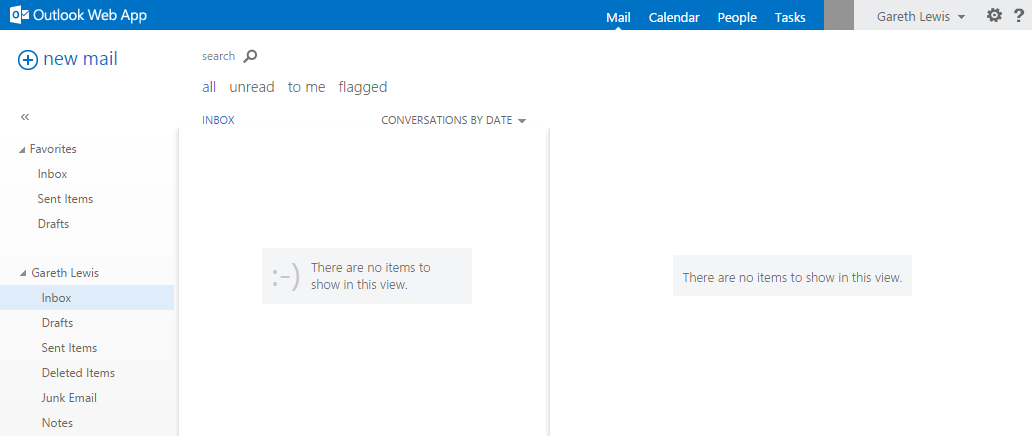

Likewise, we’re also able to log in to the Outlook Web App, again, from anywhere on the network:

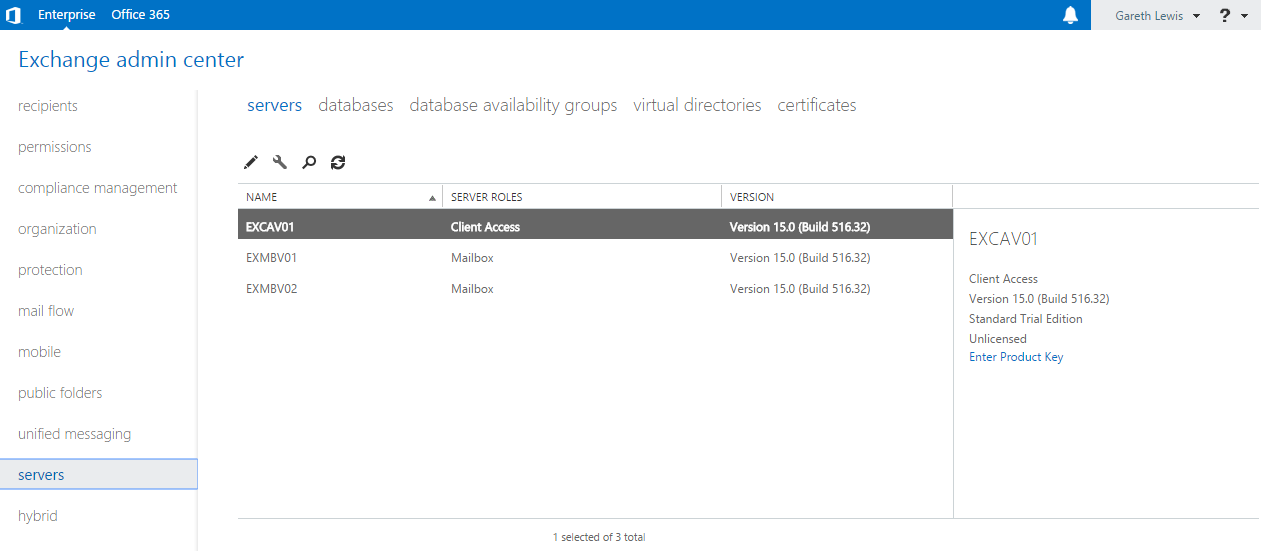

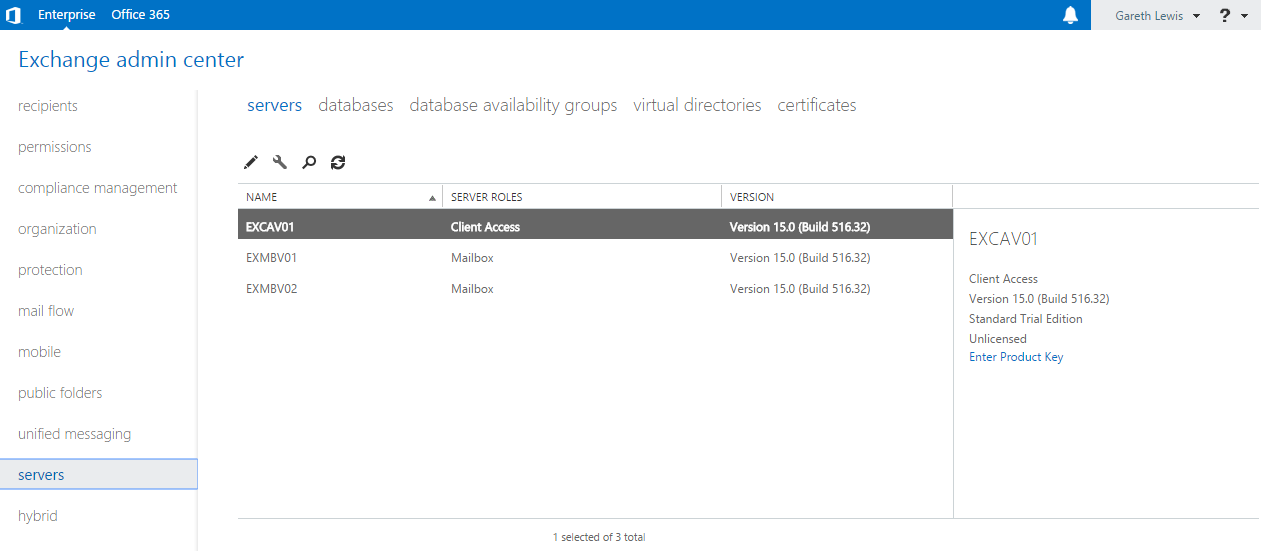

Browsing to ‘Servers > Servers’, we see that our three newly built Exchange servers are displayed, each with its role clearly indicated:
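The same information is available from the Exchange Management Shell. As a quick sketch, the following lists each Exchange server in the organisation alongside its installed roles and version:

```powershell
# List every Exchange server with its roles, edition and build.
Get-ExchangeServer |
    Format-Table Name, ServerRole, Edition, AdminDisplayVersion -AutoSize
```

In this lab I would expect EXCAV01 to report the Client Access role, and EXMBV01 and EXMBV02 the Mailbox role.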

Final Task – Ensuring Database Health

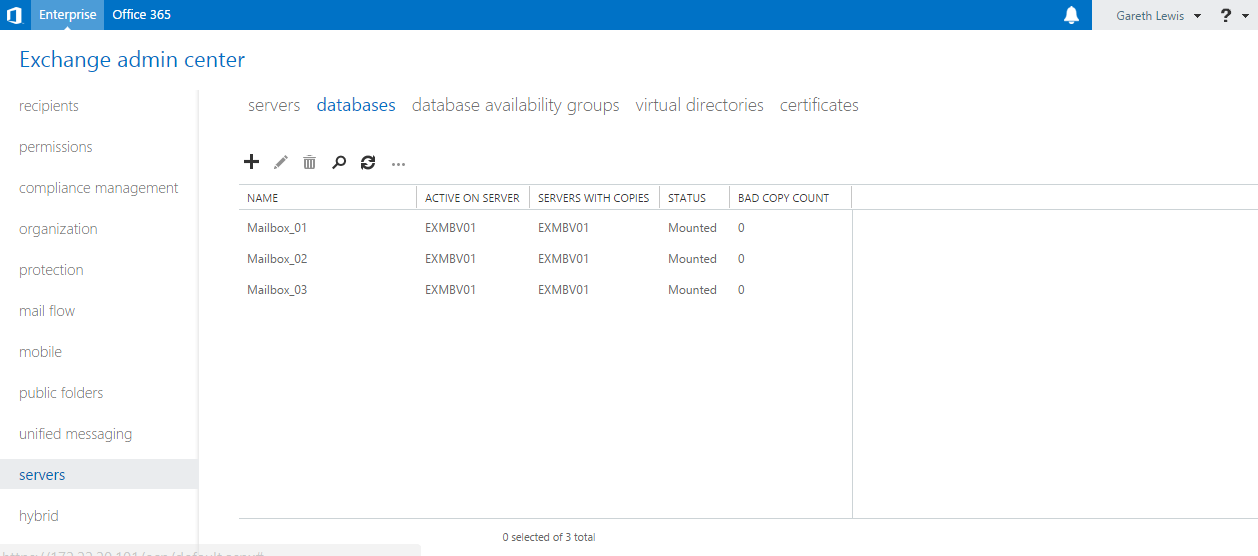

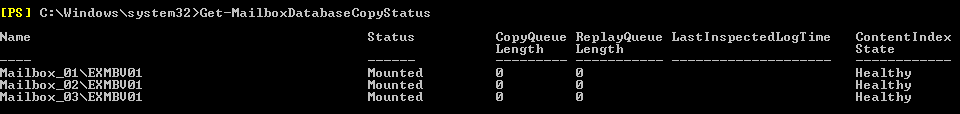

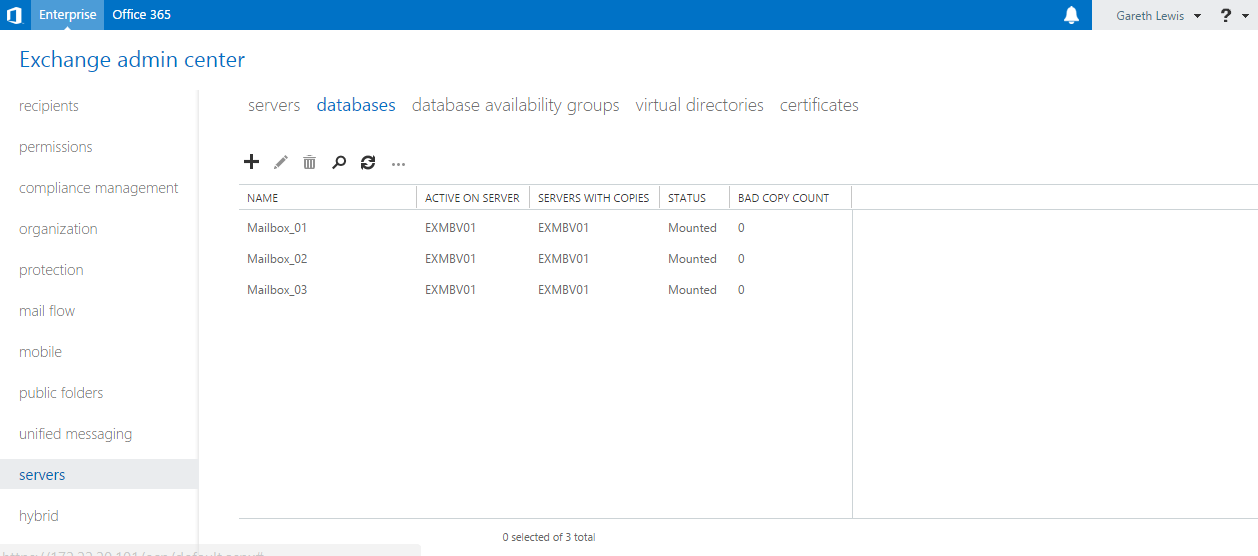

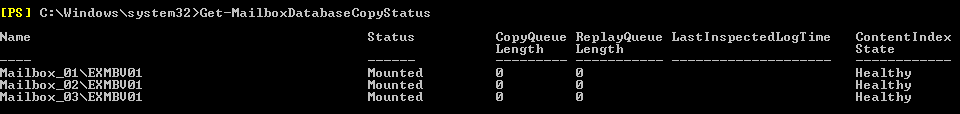

Our last task is to ensure that our databases are mounted and in a healthy state. This can be confirmed by a) browsing to ‘Servers > Databases’, or b) via the Exchange Management Shell by running the cmdlet ‘Get-MailboxDatabaseCopyStatus’:

From the above screenshots, we see the three new databases I have created, all of which are housed on one of our Mailbox servers (in this case, EXMBV01).
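For reference, the shell check might look something like the sketch below. `Get-MailboxDatabase -Status` reports whether each database is mounted, while `Get-MailboxDatabaseCopyStatus` shows the health of the copies on a given server (EXMBV01 here, as that is where my databases live):

```powershell
# Show each mailbox database, the server hosting it, and whether it is mounted.
Get-MailboxDatabase -Status |
    Format-Table Name, Server, Mounted -AutoSize

# Show copy status for the databases on EXMBV01.
Get-MailboxDatabaseCopyStatus -Server EXMBV01 |
    Format-Table Name, Status, CopyQueueLength, ContentIndexState -AutoSize
```

With no DAG in place yet, each database has only a single copy, so the status to look for is simply that it is mounted.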

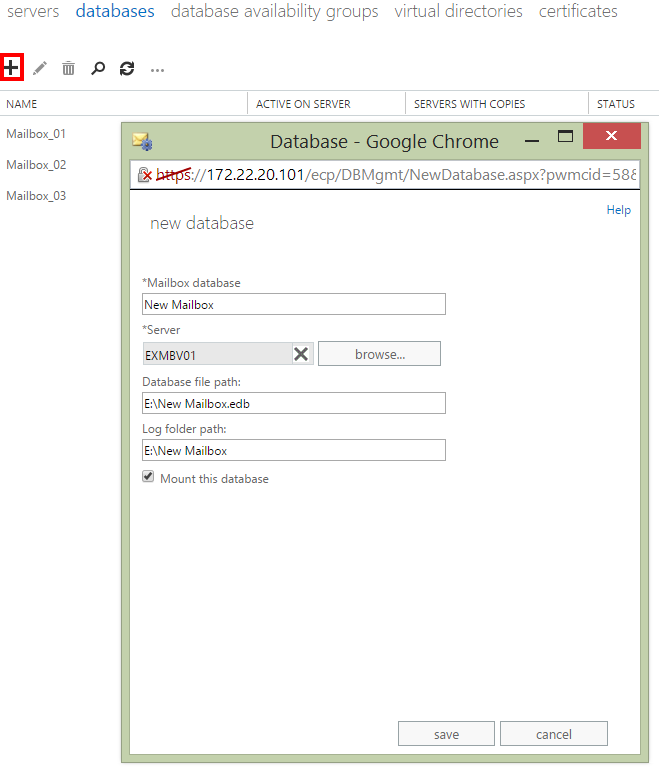

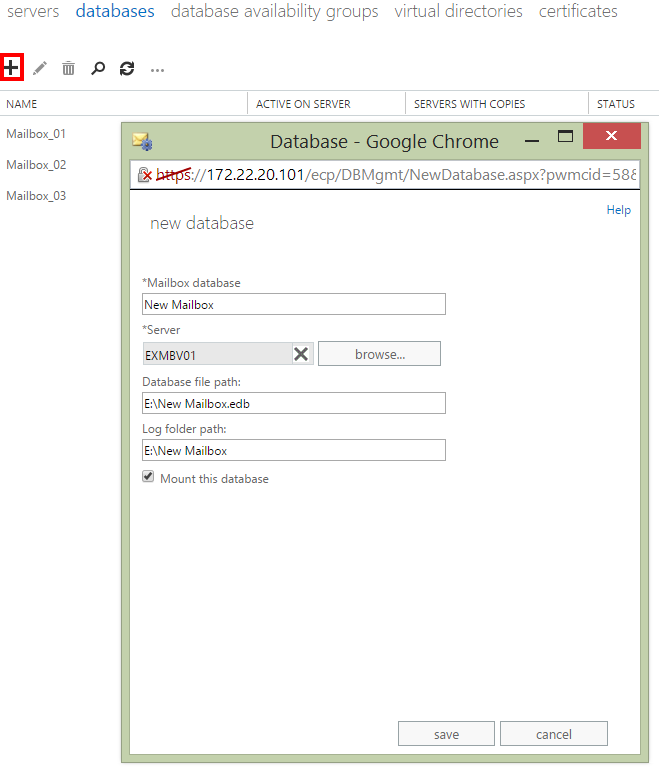

To create a database, simply browse to ‘Servers > Databases’, and click the ‘+’ symbol:

Simply give your new mailbox database a name, select one of the new mailbox servers on which to store it, and set the file path (for which we need to point at the secondary 10GB disk).
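Database creation can also be scripted from the Exchange Management Shell. This is a minimal sketch only: the database name `DB01` and the `E:\DB01` paths are hypothetical, and it assumes the secondary 10GB disk is mounted as `E:` on EXMBV01.

```powershell
# Hypothetical name and paths; E: is assumed to be the secondary 10GB disk.
New-MailboxDatabase -Name 'DB01' -Server EXMBV01 `
    -EdbFilePath 'E:\DB01\DB01.edb' `
    -LogFolderPath 'E:\DB01\Logs'

# A database created from the shell is not mounted automatically.
Mount-Database -Identity 'DB01'
```

Keeping the database file and its logs on the same disk is fine for a lab, which is all this layout is intended for.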

And that is it. Mail is now flowing nicely and, in the next post, we’ll look at enabling replication between the mailbox servers in order to ensure resilience in the event of a server/network failure.

See you in the next post…